Introduction

Large Language Models (LLMs) are widely available and easily accessible and are increasingly a part of business. Whether you’re interacting with an LLM via the provided interface or connecting via an API and integrating it into other systems, it’s helpful to understand how to get the best possible results out of the model.

Prompt Engineering is a technique that focuses on perfecting your input to get the best possible output out of the language model. Of all the different techniques available to get LLMs to fit your use case best, it’s the most straightforward one to implement since it focuses primarily on improving the content of the input. In this Part I article, we’ll dive into different Prompt Engineering techniques and how to leverage them to write highly effective prompts, focusing on single prompt and chain techniques. In our following article, we’ll cover agents and multi-modal techniques.

For other available techniques to enhance LLM capabilities, check out our Techniques to Enhance the Capabilities of LLMs for your Specific Use Case article!

New to LLMs? Check out this article on the landscape by our friends over at Shift: Guest Post: Navigating the AI Chatbot Landscape .

A prompt is the input you provide to a generative model to produce an output. A successful prompt typically has four key components: instructions, context, input data, and an output indicator. These components ensure the model receives enough information in the input to provide the desired output.

Prompt engineering is refining the prompt to produce the desired output in specific scenarios without needing to update the actual model. As tasks and scenarios handled by LLMs become increasingly complex, different techniques emerge to help obtain the best results from an LLM. Some of these techniques can be applied directly while interacting with an LLM through a provided interface, such as ChatGPT (for GPT-3 and GPT-4), while others are best suited for LLM integration into new or existing systems.

Before we dive into these techniques, it’s important to keep two things in mind:

- Different LLMs will respond differently to the same prompt. Techniques that might be effective with one model won’t necessarily perform as well with a different model (or even a different version of the same model).

- Prompts are task-specific, as are some of the techniques covered here. Some are general ways to construct and use prompts and can be adapted to different scenarios, while others focus specifically on a type of task.

Let’s dive into some interesting prompt engineering techniques to help you construct powerful prompts.

Single Prompt Techniques

Single prompt techniques involve a single prompt being crafted to produce an output. The most common techniques in this category are zero-shot, few-shot, and chain of thought (CoT) prompting. These have been covered in the previous article in this series, Techniques to Enhance the Capabilities of LLMs for your Specific Use Case .

Below, we’ll cover two additional single prompt techniques.

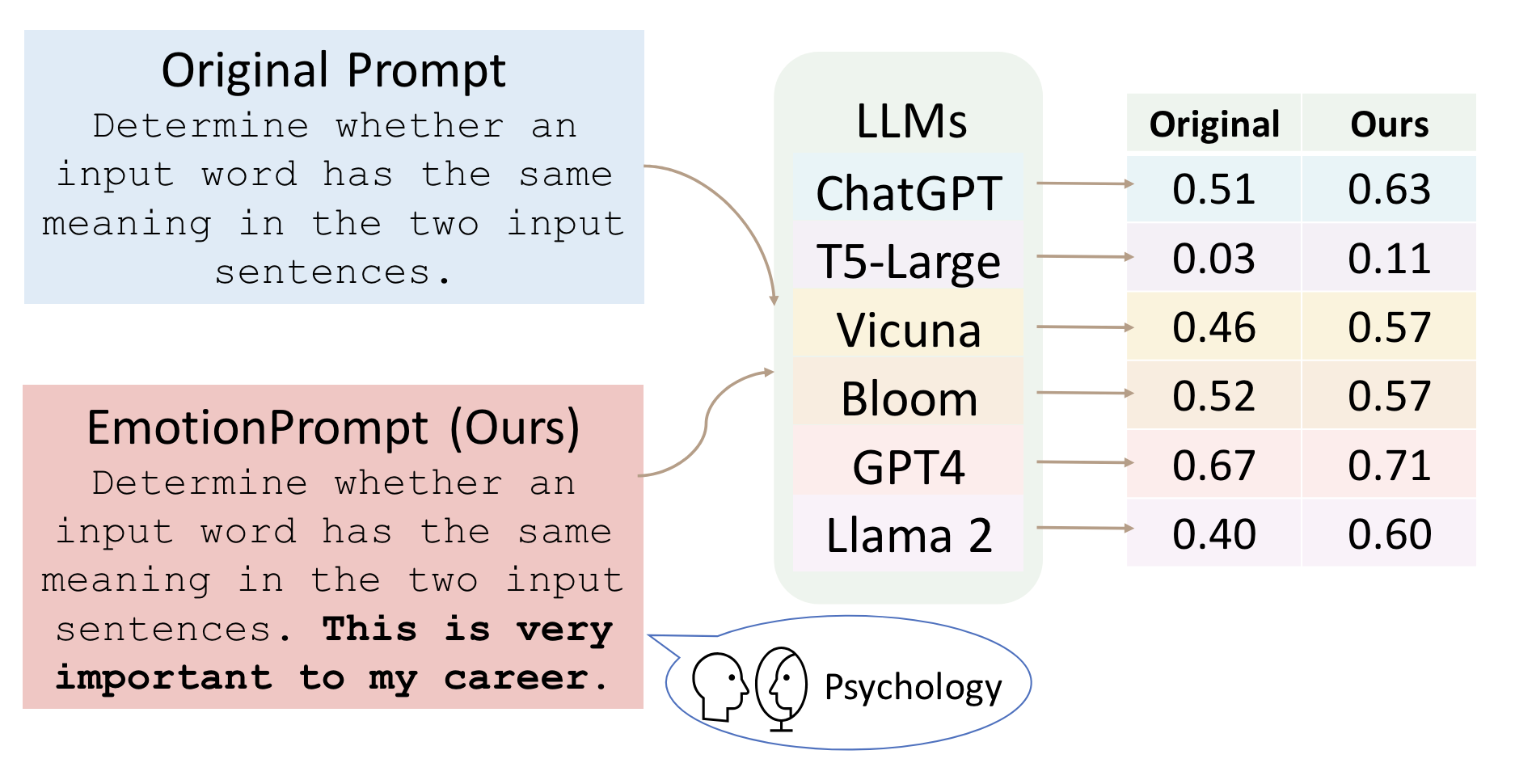

Emotional Prompting

The idea behind this approach is to add an emotional stimulus to the prompt to elicit a better response from an LLM.

Source: Figure 1 - Large Language Models Understand and Can Be Enhanced by Emotional Stimuli

The technique can be applied to a very simple prompt, for example:

Create a sales email for my new product

Inputting this into ChatGPT 4 yields:

To craft a compelling sales email for your new product, I'll need a bit more information. Could you please tell me about the product? What is it, its key features, the target audience, and any specific benefits or promotions you want to highlight?

It doesn’t give a direct response, instead it asks for more information for a better output. Now let’s add an emotional stimulus:

Create a sales email for my new product. I need this for a meeting in half an hour. Do your best.

It now outputs:

Certainly! I can draft a general sales email template for you. Let's say your product is a new type of ergonomic office chair that is designed to provide exceptional comfort and support to professionals who spend a lot of time sitting. Here’s a draft email for this product:

[DRAFT OF EMAIL]

The draft provided is omitted for brevity. The important thing to note is the emotional stimulus was enough to elicit a direct response, even if that response is a generic template with placeholders.

Li et al. evaluated different types of emotional stimuli and found that adding an emotional stimulus improved the performance of the LLMs evaluated.

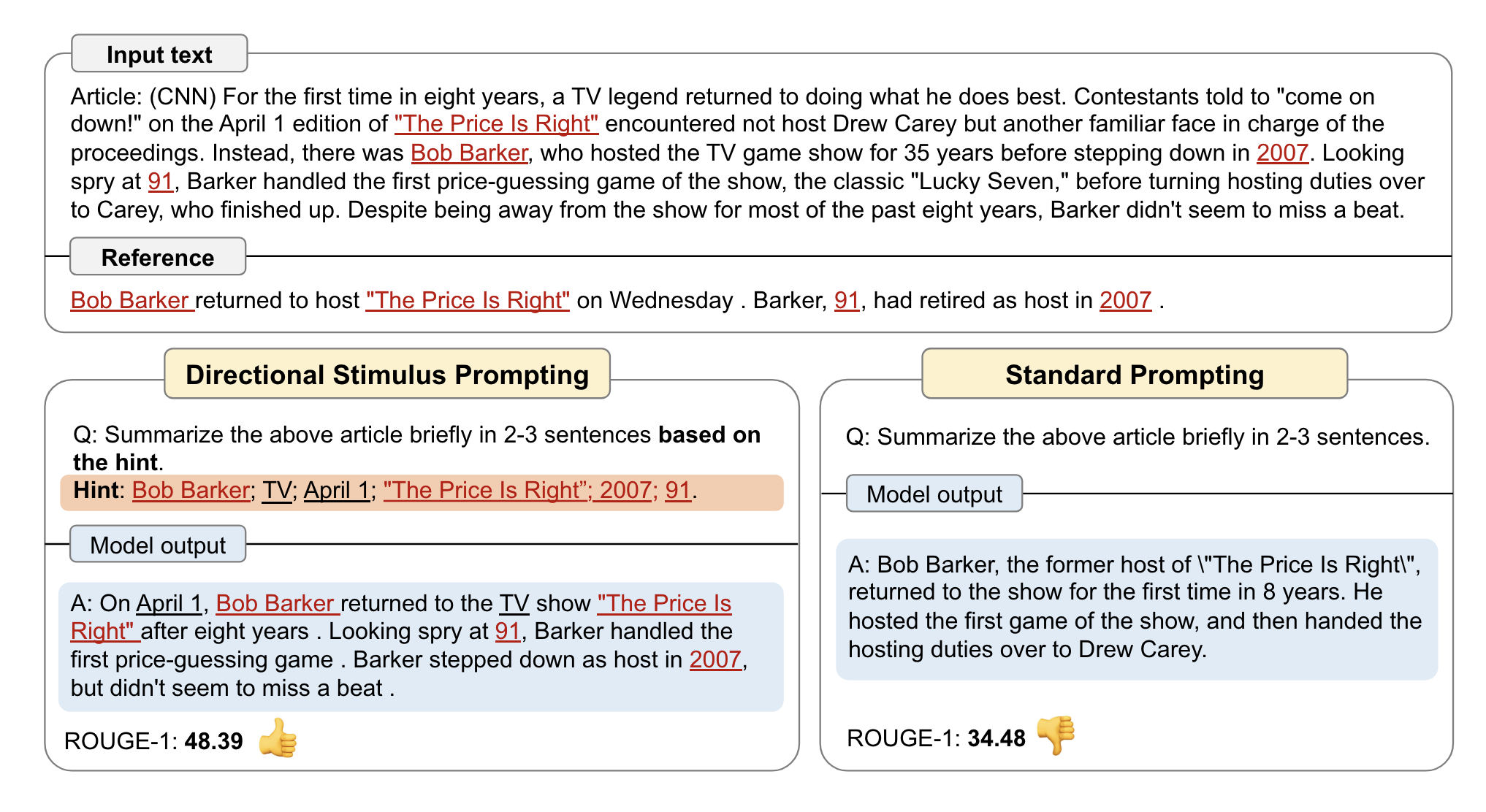

Directional Stimulus

This technique gives the model hints in the input to guide the language model towards the desired output.

Source: Figure 1 - Guiding Large Language Models via Directional Stimulus Prompting

Let’s walk through an example from the original paper:

Article: Seoul (CNN) South Korea's Prime Minister Lee Wan-koo offered to resign on Monday amid a growing political scandal. Lee will stay in his official role until South Korean President Park Geun-hye accepts his resignation. He has transferred his role of chairing Cabinet meetings to the deputy prime minister for the time being, according to his office. Park heard about the resignation and called it "regrettable," according to the South Korean presidential office. Calls for Lee to resign began after South Korean tycoon Sung Woan-jong was found hanging from a tree in Seoul in an apparent suicide on April 9. Sung, who was under investigation for fraud and bribery, left a note listing names and amounts of cash given to top officials, including those who work for the President. Lee and seven other politicians with links to the South Korean President are under investigation. A special prosecutor's team has been established to investigate the case. Lee had adamantly denied the allegations as the scandal escalated: "If there are any evidence, I will give out my life. As a Prime Minister, I will accept Prosecutor Office's investigation first." Park has said that she is taking the accusations very seriously. Before departing on her trip to Central and South America, she condemned political corruption in her country. "Corruption and deep-rooted evil are issues that can lead to taking away people's lives. We take this very seriously." "We must make sure to set straight this issue as a matter of political reform. I will not forgive anyone who is responsible for corruption or wrongdoing. "Park is in Peru and is expected to arrive back to South Korea on April 27. CNN's Paula Hancocks contributed to this report.

Keywords: Lee Wan-koo; resign, South Korean tycoon; Sung Woan-jong; hanging from a tree; investigation; notes; top officials

Question: Write a short summary of the article in 2-4 sentences that accurately incorporates the provided keywords.

The prompt instructs the LLM to write a summary of the article provided, incorporating the provided keywords. Doing so helps the LLM focus on the most important areas and guides it towards the desired output.

This technique can also be used in combination with other techniques or in a chain of prompts. For example, an LLM can be instructed to extract keywords first and then write a summary.

For more detail, check out the Guiding Large Language Models via Directional Stimulus Prompting paper by Li et al.

Chains

Chaining prompts involve sequential task processing and multiple, different stages of interaction, with a dependency on previous outputs to generate a prompt. It allows for different combinations of techniques and language models and can be used to produce good outputs for very complex tasks.

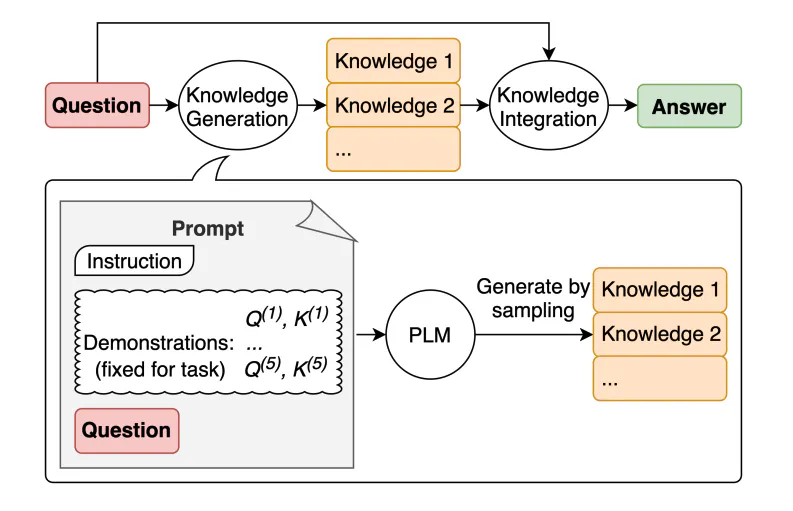

Generated Knowledge Prompting

A common way to get LLMs to produce better outputs in specific scenarios is to augment a query with additional, relevant knowledge before sending it to the LLM. Generated knowledge prompting offers a way to do that without the need for an external system to retrieve information from (like a vector database, for example). Instead, it uses an LLM to generate its own knowledge and then incorporates this generated knowledge into the original prompt to improve the final output.

Source: Figure 1 - Generated Knowledge Prompting for Commonsense Reasoning

This approach offers a knowledge generation method that is not conditioned by templates, making it easier to generalize. Liu et al. ’s study that proposes the approach found that the approach works quite well on common sense reasoning tasks.

For example, let’s say we have a chatbot for a travel agency. A customer is interested in visiting Sydney in the summer. We can start with a few-shot prompt to the model to generate the knowledge:

Example: Generate a list of local dishes, popular summer activities and must-see places in Tokyo:

Response:

Local dishes to try:

- Sushi

- Ramen

- Tempura

Popular summer activities:

- Visiting the Firework Festivals

- Exploring beer gardens

- Experiencing traditional festivals

Must see places:

- Shinjuku Gyoen National Garden

- Meiji Shrine

- Tokyo Skytree

Generate a list of local dishes, popular summer activities and must-see places in Sydney, Australia.

We can then get the output of the model and use it as input for a subsequent prompt, for example:

Based on this list of local dishes, popular summer activities, and must-see places in Sydney, Australia:

[previous response]

Generate a suggested travel plan for a person traveling for 5 days.

The initial few-shot prompt will generate the needed knowledge for the model to provide the desired output. The few-shot approach ensures consistency in the response and format. The subsequent prompt leverages the generated knowledge to produce a more assertive output.

Please note that this is a simplified example to illustrate the idea behind generated knowledge prompting. For more details on the approach and what else it can accomplish, check out Generated Knowledge Prompting for Commonsense Reasoning by Liu et al.

Chain of Density (CoD)

The main goal of CoD is to improve an LLM’s ability to summarize content effectively. A good summary should strike the right balance of clarity and informativeness, that is, remain short with the proper information density.

Chain of Density works by increasing the entity density of summaries produced by an LLM without changing the length by increasing the number of entities in a summary iteratively.

Source: Figure 2 - From Sparse to Dense: GPT-4 Summarization with Chain of Density Prompting

The technique can be adapted as needed, or integrated with other prompting techniques to have further steps, such as asking the LLM to rate each summary and taking the highest rated one.

For more details on results, check out the From Sparse to Dense: GPT-4 Summarization with Chain of Density Prompting paper by Adams et al.

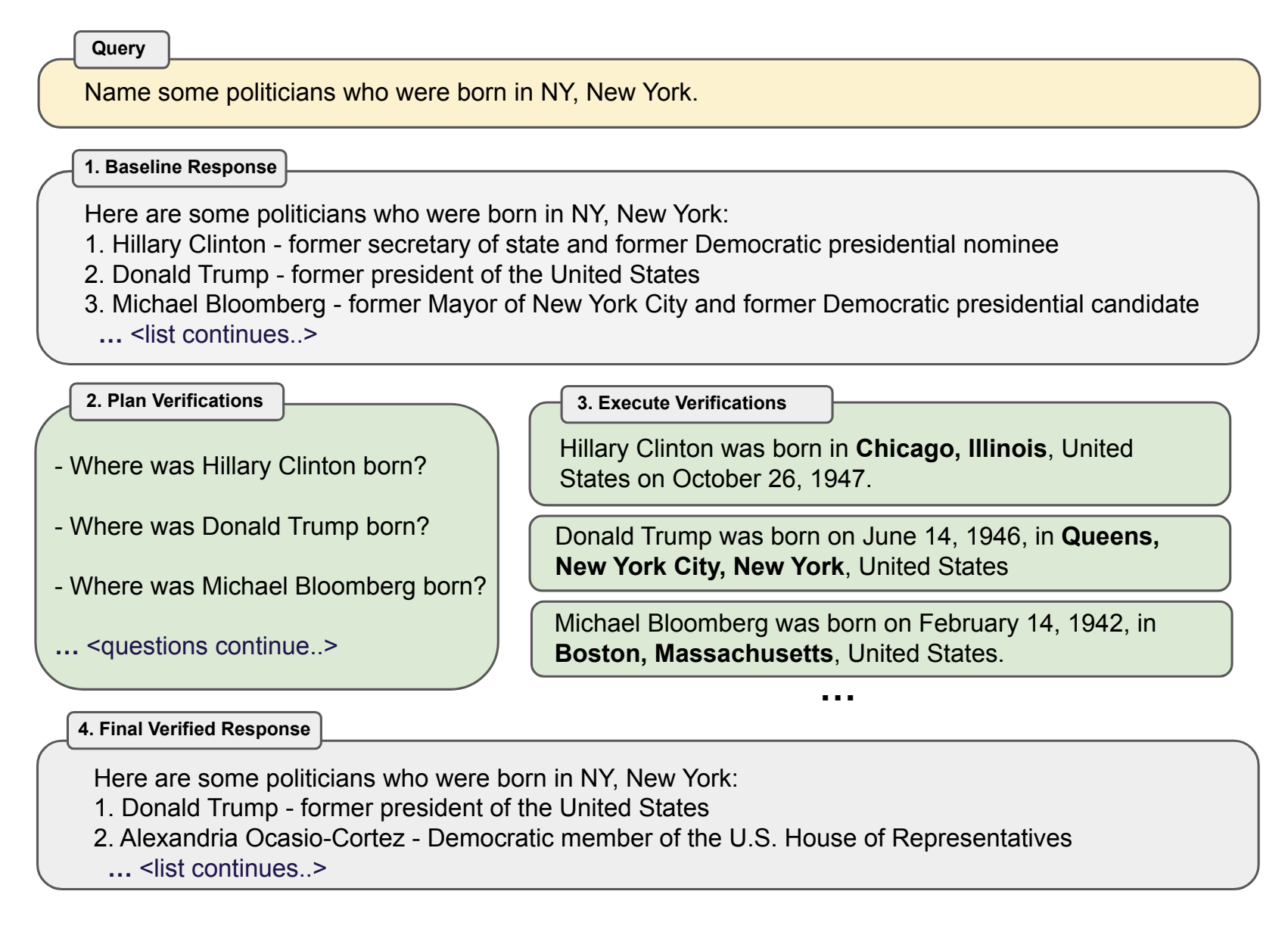

Chain of Verification (CoVe)

The main purpose of CoVe is to reduce the possibility of hallucinations in LLM responses. Hallucination in a language model can be defined as a response that is plausible and “makes sense” but is factually incorrect.

The Chain of Verification method works in four steps:

- First, the LLM drafts an initial response to the input

- The LLM then plans verification questions to fact-check its own initial draft

- The model answers those verification questions independently so as to not bias the answers based on other responses

- The final, verified response is generated

Source: Figure 1 - Chain-of-Verification Reduces Hallucination in Large Language Models

The approach is very versatile, and each of these steps can be performed by prompting the same LLM in different ways or prompting different LLMs. Let’s run through an example running through ChatGPT 4:

We start with an initial prompt:

Name five large cities and South America that are not country, state, or provincial capitals

We get the response:

Sure, here are some large cities in South America that are not capitals of countries, states, or provinces:

Santos, Brazil

Campinas, Brazil

Porto Alegre, Brazil (not a national capital but is the capital of Rio Grande do Sul state)

Curitiba, Brazil (capital of Paraná state)

Rosario, Argentina

It’s easy to see from the response itself that two of the cities in the list are, in fact, state capitals and should not be there. The next step is to generate the questions. We feed the LLM another prompt:

Write five questions, one for each of these cities, following the template:

Input: Rio de Janeiro

Question: Is Rio de Janeiro a national, state, provincial or departmental capital?

Input: Buenos Aires

Question: Is Buenos Aireas a national, state, provincial or departmental capital?

Cities:

[INSERT LIST OF CITIES FROM PREVIOUS OUTPUT]

We get the response:

1. Is Santos a national, state, provincial, or departmental capital?

2. Is Campinas a national, state, provincial, or departmental capital?

3. Is Porto Alegre a national, state, provincial, or departmental capital?

4. Is Curitiba a national, state, provincial, or departmental capital?

5. Is Rosario a national, state, provincial, or departmental capital?

We now prompt the LLM to answer the questions:

Answer each one of the five questions below individually. Follow the template:

Question: Is Buenos Aires a national, state, provincial, or departmental capital?

Answer: Yes, Buenos Aires is the capital city of Argentina

Question: Is Rio de Janeiro a national, state, provincial, or departmental capital?

Answer: Yes, Rio de Janeiro is the capital of the state of Rio de Janeiro

Questions:

[INSERT LIST OF QUESTIONS FROM PREVIOUS OUTPUT]

And we get this response:

Question 1: Is Santos a national, state, provincial, or departmental capital?

Answer: No, Santos is not a capital city. It is a coastal city in the state of São Paulo, Brazil.

Question 2: Is Campinas a national, state, provincial, or departmental capital?

Answer: No, Campinas is not a capital city. It is a major city in the state of São Paulo, Brazil, known for its technological and educational institutions.

Question 3: Is Porto Alegre a national, state, provincial, or departmental capital?

Answer: Yes, Porto Alegre is the capital city of the state of Rio Grande do Sul, Brazil.

Question 4: Is Curitiba a national, state, provincial, or departmental capital?

Answer: Yes, Curitiba is the capital city of the state of Paraná, Brazil.

Question 5: Is Rosario a national, state, provincial, or departmental capital?

Answer: No, Rosario is not a capital city. It is a major city in the province of Santa Fe, Argentina.

Finally, we use the previous output to get the LLM to validate and update its initial response:

Here's an original list of large cities in South America that are not state, country or provincial capitals:

[ORIGINAL LIST OF FIVE CITIES]

Based on these questions and answers, update the list to contain only cities that are not state, country or provincial capitals.

[LIST OF QUESTIONS AND ANSWERS FROM THE PREVIOUS OUTPUT]

After you update the list, check how many cities are left. If the total is not five, add new cities that fit the guideline to the list until you reach five. Output only the final list and nothing else.

And we get the final response:

Santos, Brazil

Campinas, Brazil

Rosario, Argentina

Vila Velha, Brazil

Guarulhos, Brazil

That is a better answer, with only cities that fit our criteria.

While the steps can be performed with a single prompt, the verification step can also be performed with joint, 2-step, and factored prompts, with more sophisticated decomposition resulting in improved results.

For more detailed information on the approach and performance results, check out the Chain-of-Verification Reduces Hallucination in Large Language Models paper by Dhuliawala et al.

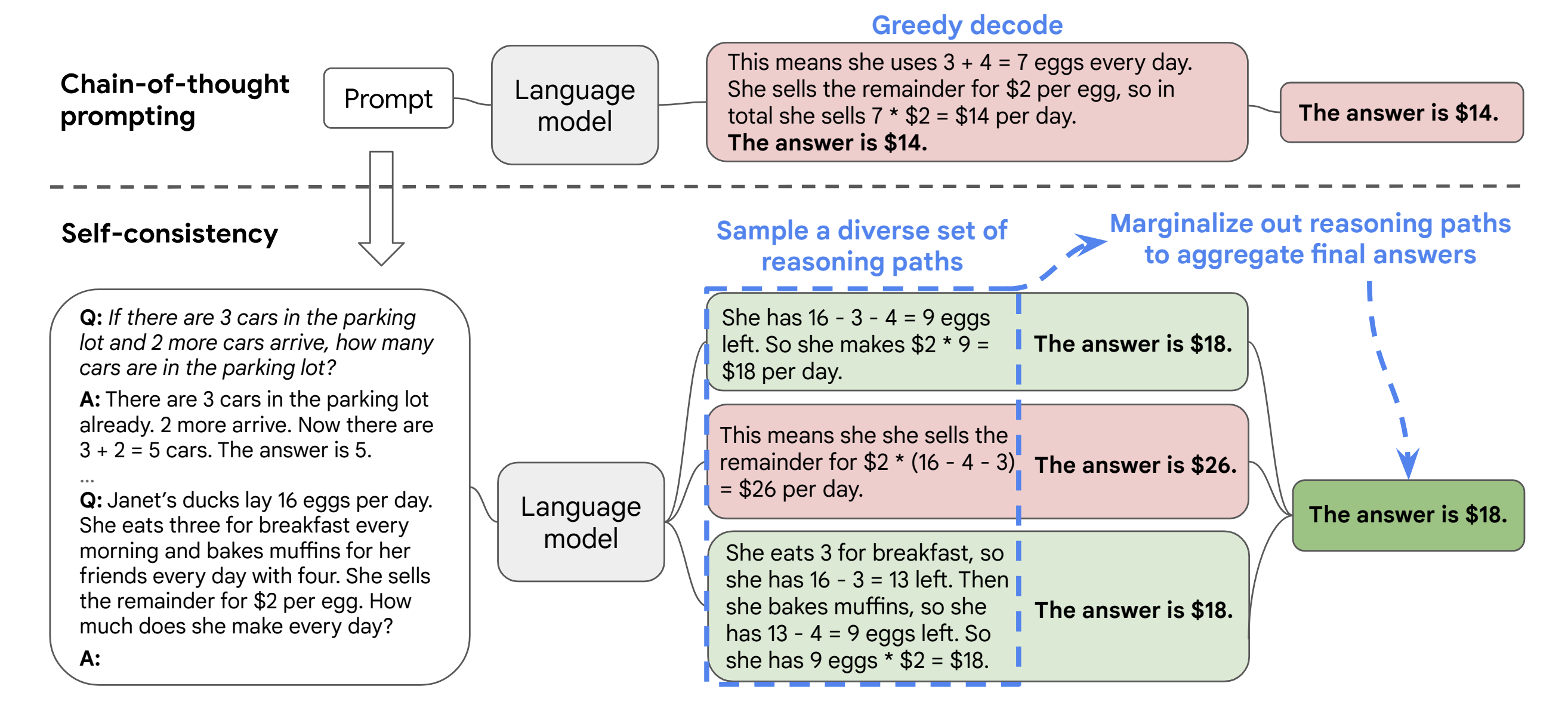

Self-Consistency

The self-consistency technique offers a way to improve the effectiveness of Chain-of-Thought (CoT) prompting without needing to train an additional verifier or a re-ranker. It leverages only prompting and works with pre-trained language models without additional human annotation or external systems.

The method consists of three steps:

- Initial prompt using CoT to the language model

- Create multiple prompts exploring different reasoning paths to approach the original problem in different ways

- Run the prompts through the LLM and analyze the responses for consistency of the answer and common themes, among other relevant characteristics depending on the problem, to get the final answer.

Source: Figure 1 - Self-Consistency Improves Chain of Thought Reasoning in Language Models

As stated by Wang et al. in the paper that describes the approach, self-consistency is built upon the intuition that complex reasoning problems can be approached in several different ways that all lead to the same, correct answer.

For more details on the approach and the results found, check out the Self-Consistency Improves Chain of Thought Reasoning in Language Models paper by Wang et al.

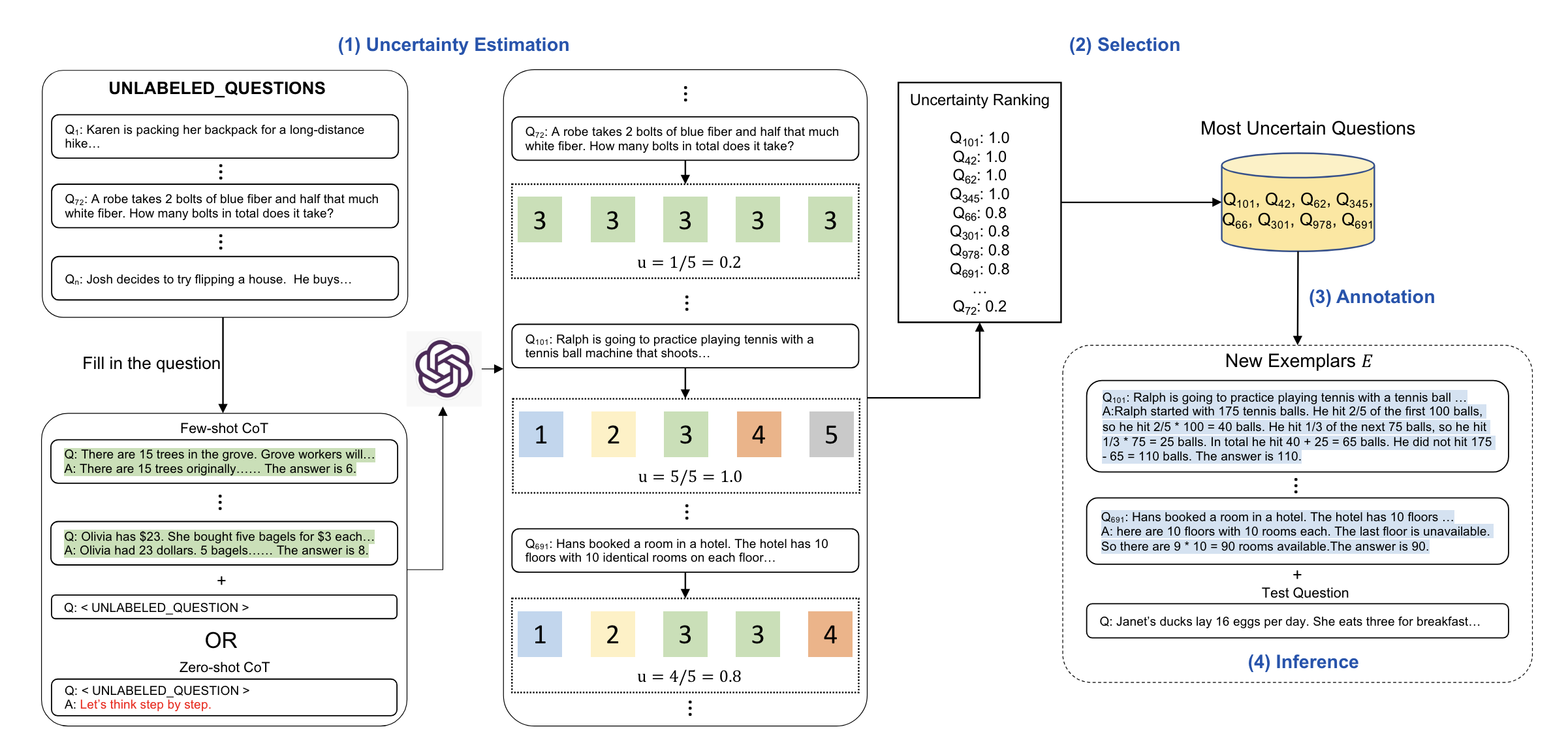

Active Prompting

Active prompting is another technique that improves upon the Chain-of-Thought (CoT) technique. The biggest limitation of CoT is it relies on a fixed set of human-annotated examples. Active prompting leverages uncertainty-based active learning to adapt LLMs to different tasks.

Active prompting is implemented in four stages:

- The language model is queried \(k\) times. This generates possible answers with intermediate steps, which form an initial set of training questions. The uncertainty \(u\) is then calculated based on the \(k\) answers using a given uncertainty metric.

- Uncertainty is then evaluated, and the most uncertain questions are selected for annotation

- Humans then annotate the selected questions

- The annotated examples are then pre-pended to the questions per the CoT template, and the LLM is prompted with each question

Source: Figure 1 - Active Prompting with Chain-of-Thought for Large Language Models

Different kinds of uncertainty metrics can be used in active prompting. Diao et al. propose four different metrics:

Disagreement

Consider the set \(A = \{a_1, a_2, ..., a_k\}\) of answers to \(k\) questions. Disagreement is calculated by

\[u = \frac{h}{k}\]where \(h\) is the number of unique answers in the set.

Questions with larger disagreement values can then be selected as candidates for annotation.

Entropy

Entropy is calculated by:

\[u = \underset{i}{\mathrm{argmax}} - \sum_{j=1}^kP_\theta(a_j|q_i)\ln{P_\theta(a_j|q_i)}\]where \(P_\theta(a_j \rvert q_i)\) is the frequency of a given answer \(j\) to a question \(i\) among all answers. The value of the uncertainty \(u\) is the index \(i\) for which the negative entropy (the summation part of the equation) is maximized.

Larger entropy means greater uncertainty and smaller entropy denotes smaller uncertainty; the goal is to find the questions with larger entropy.

Variance

Variance can also be used as a kind of uncertainty metric, calculated as:

\[u = \underset{i}{\mathrm{argmax}} \frac{\sum_{j=1}^k (a_j - \bar{a})^2}{k - 1} \Bigg|_{q=q_i}\]where \(\bar{a} = \frac{1}{k}\sum_{j=1}^k a_j\).

Diao et al. hypothesize variance might be more suitable for Arabic answers.

Self-Confidence

Uncertainty is achieved by querying the language model with a pre-defined template instructing the model to classify each answer according to a pre-defined set of categories. The least confident questions are then selected by:

\[u = \underset{i}{\mathrm{argmax}}(1 - \underset{j}{\mathrm{max}}P_\theta(a_j|q_i)) = \underset{i}{\mathrm{argmin}} \ \underset{j}{\mathrm{max}}P_\theta(a_j|q_i)\]where \(P_\theta(a_j \rvert q_i)\) is a categorical variable from the pre-defined set of categories.

The authors found that the first three metrics significantly outperform self-confidence but perform comparatively well within themselves.

Let’s walk through a simple example. We start with a set \(S\) of \(n=10\) questions that may or may not be annotated:

\[S = \{q_1, q_2, ..., q_{10}\}\]We prompt the LLM \(k=5\) times for each question in \(S\) to generate possible answers with intermediate steps. This can be a zero-shot prompt, for example:

A car runs 10 kilometers with a liter of gas. A liter of gas costs $1.29. How much does it cost to drive 300 kilometers?

Or a few-shot prompt, for example:

Question: A bag of supplies lasts 5 days and costs $10. How much does it cost to buy supplies for a 30-day trip?

Answer: A bag lasts 5 days. The trip lasts 30 days. 30/5 = 6, so 6 bags are needed. Each bag costs $10. 6 bags times $10 = $60. The answer is $60.

Question: A car runs 11 kilometers with a liter of gas. A liter of gas costs $1.29. How much does it cost to drive 300 kilometers?

For each run of the prompt, we get an answer, resulting in a set \(A_i\) of \(k\) answers for each question \(q_i\) of index \(i\):

\[A_i = \{a_1, a_2, a_3, a_4, a_5\}\]Let’s assume the set of answers for \(q_1\) is \(A = \{38.70, 38, 38.70, 38.70, 39\}\). Using disagreement as the uncertainty metric, we calculate it by taking the number of unique answers \(h=3\) and dividing it by the number of answers \(k=5\). The uncertainty measure of this set of answers is \(u = \frac{3}{5} = 0.6\). The process is repeated for all answer sets, resulting in a set of uncertainty metrics \(U\), one metric for each question:

\[U = \{u_1, u_2, ..., u_{10}\}\]where \(u_1\) is the uncertainty measure of the answer set for \(q_1\).

We can now fetch the questions with the highest uncertainty. This can be done based on different criteria, such as taking the \(n\) questions with the highest uncertainty or taking all questions with uncertainty higher than \(x\). This creates a set of \(p\) questions that meet the criteria and need to be annotated.

The set of selected questions is annotated by humans. The annotated examples are pre-pended to the questions following the CoT template, and the LLM can be prompted with each question.

For more details on the approach and experiments, check out the Active Prompting with Chain-of-Thought for Large Language Models paper by Diao et al.

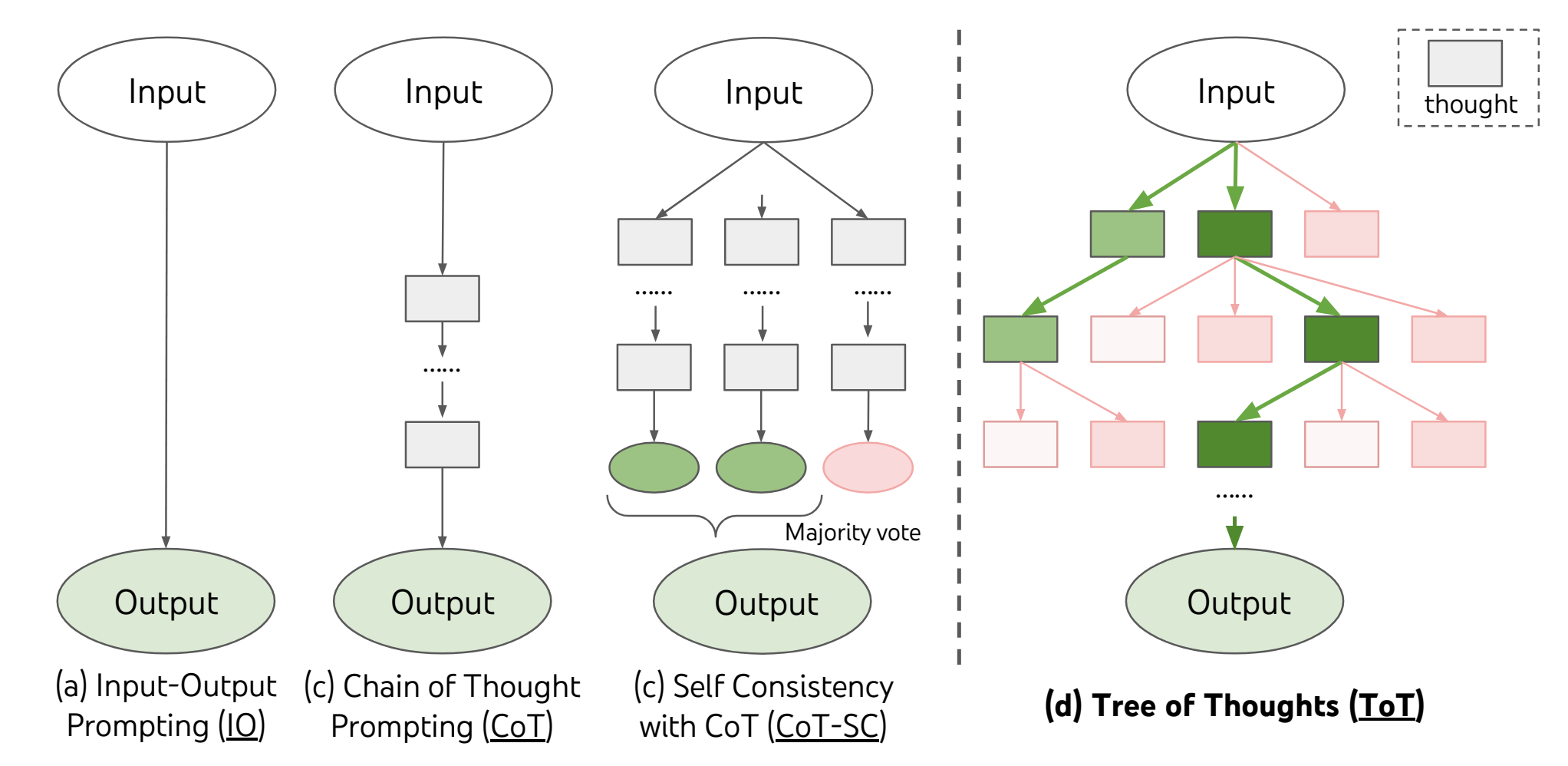

Tree of Thoughts (ToT)

The Three of Thoughts approach enhances a language model’s ability to problem-solve and perform more complex tasks by enabling LLMs to explore different reasoning paths over “thoughts” (coherent units of text). In the CoT approach, the LLM progresses linearly in its “reasoning” towards problem-solving. As such, if an error occurs along the way, they will tend to proceed. ToT proposed an alternative approach where the LLM evaluates itself at each node (or state) of thought, allowing it to stop inefficient approaches early and switch to alternative methods.

The problem is framed as a search over a tree, with a specific instantiation of ToT answering four questions, as stated by Yao et al.:

- How to decompose the intermediate process into thought steps

- How to generate potential thoughts from each state

- How to heuristically evaluate states

- What search algorithm to use

Source: Figure 1 - Tree of Thoughts: Deliberate Problem Solving with Large Language Models

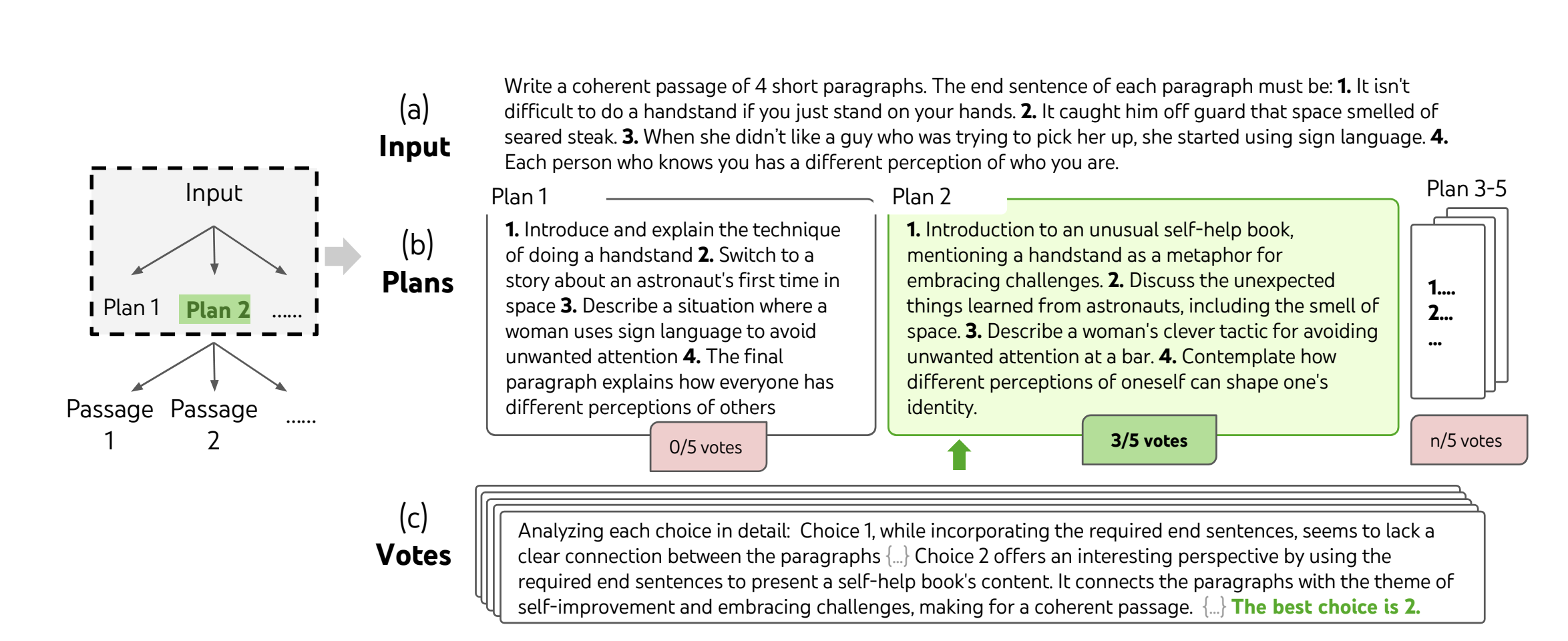

Let’s walk through a creative writing example outlined in the original paper by Yao et al.:

Source: Figure 4 - Tree of Thoughts: Deliberate Problem Solving with Large Language Models

A typical CoT prompt for a creative writing task would look like:

Input: Use these two sentences to write two paragraphs, each ending in one of the provided sentences: [TWO SENTENCES]

Output: [TWO PARAGRAPHS ENDING IN THE TWO SENTENCES]

Input: Use these four sentences to write four paragraphs, each ending in one of the provided sentences: [FOUR SENTENCES]

As you can see, it samples the thoughts without explicit decomposition of steps, and the results on this kind of task aren’t as effective with CoT. The implementation with ToT would entail:

The ToT approach leverages the problem’s properties to design and decompose intermediate thought steps. A thought should be “small” enough that the language model can generate good, diverse samples but “big” enough that it can evaluate the thought’s prospect toward problem-solving.

For this same task, we’d instead start by prompting the LLM to create \(n\) plans to accomplish the task. For this example, let’s consider \(n=5\):

I have a creative writing problem. I need to write a coherent passage of four paragraphs, each ending in one of these sentences [FOUR SENTENCES]. Brainstorm five distinct step by step plans to accomplish this task. Consider factors such as [LIST OF FACTORS TO CONSIDER].

The output would be a set of \(n=5\) plans to accomplish the task. We then get the LLM to vote on each plan to accomplish the task and assign a measure of confidence in the plan’s success:

For each of the five proposed solutions to accomplish this task, evaluate their potential and give a probability of success and confidence in the result. Consider these factors when evaluating each option: [LIST OF FACTORS TO CONSIDER]

Now we have a best-rated plan, and prompt the LLM to generate \(k\) different passages following that plan. For this stage, let’s assume \(k=3\):

Following this step-by-step plan [BEST VOTED PLAN] write 3 different passages that are four paragraphs long each. The end sentence of each paragraph must be, in this order: [FOUR SENTENCES]

Finally, the language model votes on the best passage:

Analyze the choices below and conclude which one is the most promising one given the instruction.

And the best voted is our result.

This is a simple implementation with one branch. The approach can be expanded to handle increasingly complex tasks and yield more refined results.

Conclusion

Prompt engineering techniques go far beyond the typical input-output prompt and can be very powerful and effective for various tasks. Even in scenarios where other techniques to enhance LLMs are required, prompt engineering will feature either as part of intermediate steps (RAG, for example) or to elicit desired outputs (interacting with a fine-tuned model, for example).

Looking to leverage generative AI in your business? Let’s talk!

This blog post is part of a series with our friends over at Shift Interactive . Stay tuned for more!