
Takeaways from NERCOMP 2025 with a focus on AI in Higher Ed (Part 2)

Introduction

I recently attended NERCOMP for the first time and got to connect with IT professionals in the higher ed space. This is the second article in a series collecting my personal takeaways from some of the most interesting AI-related sessions at the conference.

The first article in the series was about local AI in higher ed. This one covers a case study on AI-assisted account provisioning at UCLA.

The Future of Account Provisioning with AI at UCLA

Anna Ahearn and Krithik Udayashankar from UCLA IT Services presented a solution that leaned heavily on custom GPTs to optimize account provisioning across departments at their institution.

Problem

UCLA’s provisioning workflows are complex, inconsistent, and poorly documented. This leads to delays, risk, and recurring mistakes when onboarding people to, or offboarding them from, university systems.

Vision

The team envisioned an AI chatbot, built on custom GPTs, that would provide accurate answers to access-related questions, reduce administrative overhead, and improve compliance and efficiency.

The goal was to streamline workflows and address common challenges in managing access provisioning across departments.

Solution

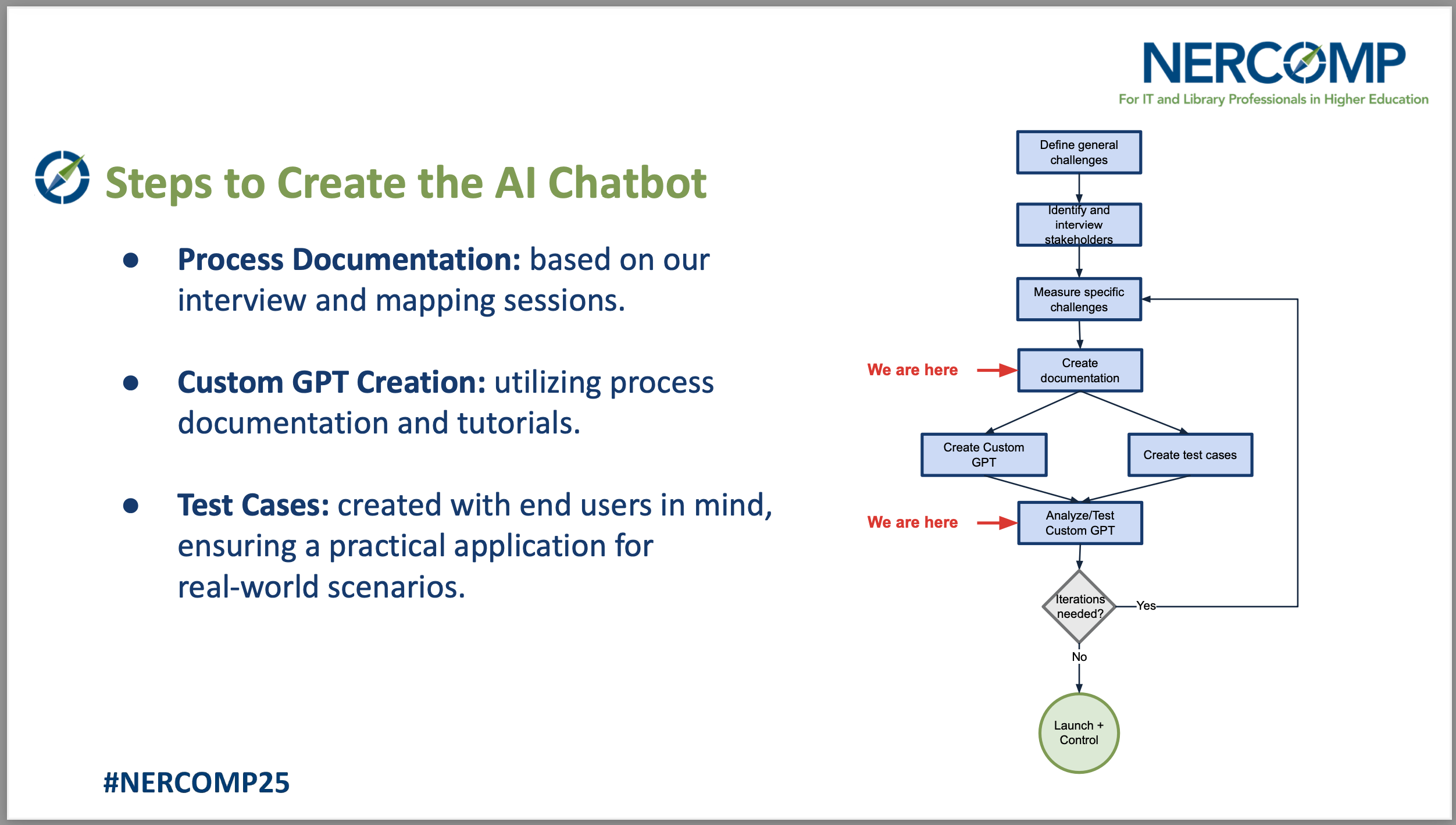

The team conducted stakeholder interviews to process-map current workflows and identify key challenges. They then developed a domain-specific GPT chatbot tailored to address real scenarios, such as providing guidance on requesting access to the Bruin Financial Aid system or updating a user’s role.

Grounding the chatbot in real workflows was meant to ensure it could deliver accurate, context-aware responses that streamline access provisioning tasks.

For the implementation, the team decided to use OpenAI’s custom GPTs feature.

Ethical Commitments

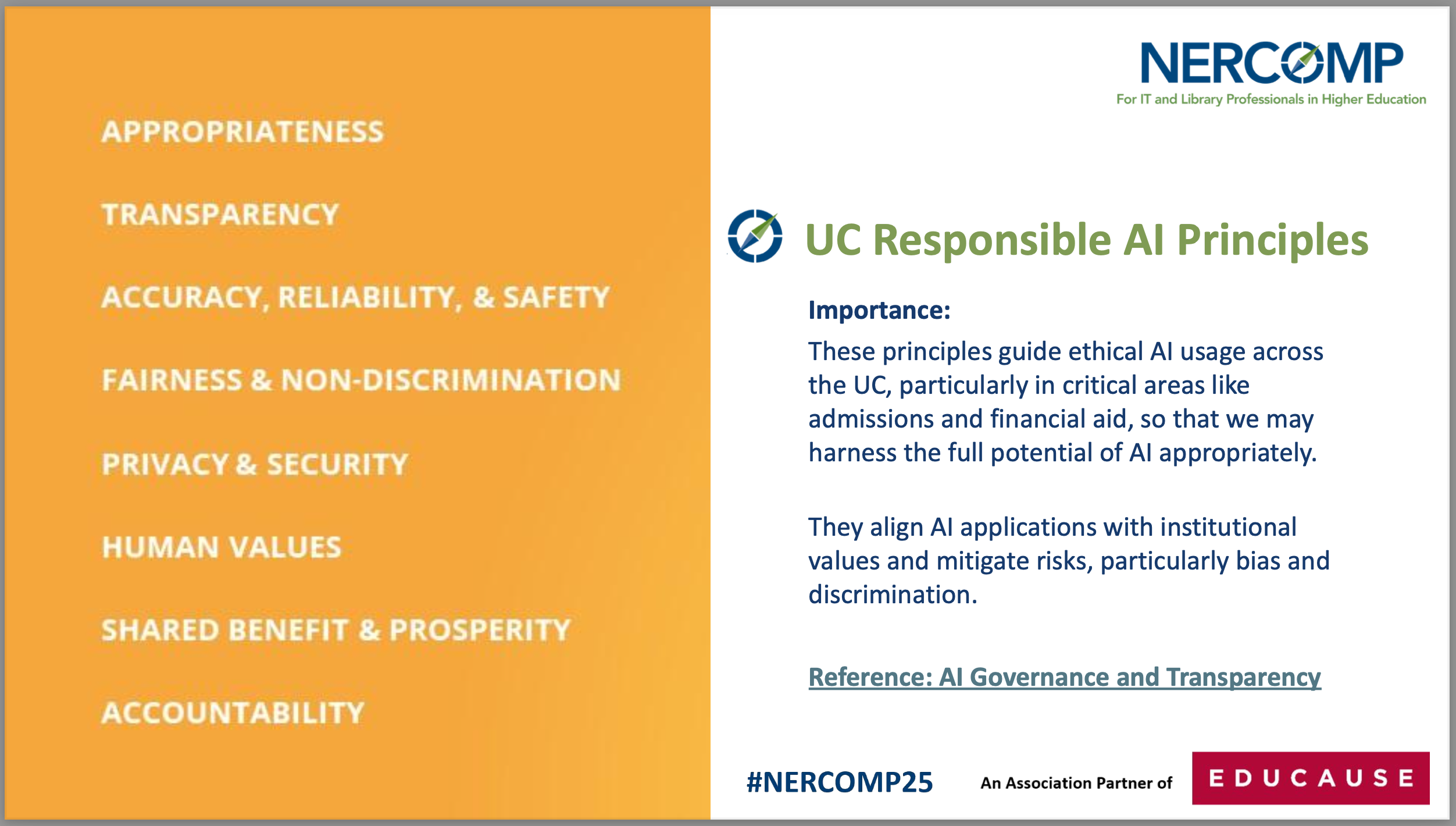

From the very beginning, the team prioritized adherence to the University of California’s Responsible AI Principles:

- Appropriateness: The potential benefits and risks of AI and the needs and priorities of those affected should be carefully evaluated to determine whether AI should be applied or prohibited.
- Transparency: Individuals should be informed when AI-enabled tools are being used.
- Accuracy, Reliability, and Safety: AI-enabled tools should be effective, accurate, and reliable for the intended use and verifiably safe and secure throughout their lifetime.
- Fairness and Non-Discrimination: AI-enabled tools should be assessed for bias and discrimination.
- Privacy and Security: AI-enabled tools should be designed in ways that maximize privacy and security of persons and personal data.
- Human Values: AI-enabled tools should be developed and used in ways that support the ideals of human values, such as human agency and dignity, and respect for civil and human rights.
- Shared Benefit and Prosperity: AI-enabled tools should be inclusive and promote equitable benefits (e.g., social, economic, environmental) for all.
- Accountability: The University of California should be held accountable for its development and use of AI systems in service provision in line with the above principles.

They emphasized the importance of stakeholders understanding and trusting the system.

Co-creation was a key focus: the team collaborated with diverse groups to shape the solution.

Additionally, they worked diligently to mitigate bias, recognizing its potential impact on the effectiveness and fairness of the AI system.

Future Outlook

The team envisions a future where their solution incorporates predictive access assignments, enabling the system to anticipate user needs based on patterns and roles.

They aim to implement dynamic access control, allowing permissions to adjust in real time as circumstances change.

They also aim to automate deprovisioning, streamlining the removal of access that is no longer needed and reducing both risk and administrative overhead.
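To make the role-based assignment and automated deprovisioning ideas more concrete, here is a minimal Python sketch of access reconciliation. It is not UCLA’s implementation, which the talk did not describe at this level of detail: the role names, the `ROLE_ENTITLEMENTS` table, the `User` type, and the `reconcile` function are all hypothetical. The idea is to derive the access a person should have from their current roles and status, then grant anything missing and revoke anything left over.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from institutional role to the systems it grants.
# The identifiers are illustrative only, not real UCLA system names.
ROLE_ENTITLEMENTS: dict[str, set[str]] = {
    "financial_aid_staff": {"email", "bruin_financial_aid"},
    "faculty": {"email", "course_site"},
    "student": {"email"},
}


@dataclass
class User:
    """Hypothetical user record: current roles, status, and granted access."""
    user_id: str
    roles: set[str]
    active: bool = True
    granted: set[str] = field(default_factory=set)


def expected_access(user: User) -> set[str]:
    """Entitlements implied by the user's current roles (none if inactive)."""
    if not user.active:
        return set()
    access: set[str] = set()
    for role in user.roles:
        # Unknown roles contribute no entitlements.
        access |= ROLE_ENTITLEMENTS.get(role, set())
    return access


def reconcile(user: User) -> tuple[set[str], set[str]]:
    """Return (to_grant, to_revoke) so granted access matches expected access."""
    expected = expected_access(user)
    to_grant = expected - user.granted
    to_revoke = user.granted - expected  # the deprovisioning step
    return to_grant, to_revoke


if __name__ == "__main__":
    # Someone who moved from financial aid staff to a student role.
    moved = User("jdoe", roles={"student"}, granted={"email", "bruin_financial_aid"})
    to_grant, to_revoke = reconcile(moved)
    print("to grant:", to_grant)
    print("to revoke:", to_revoke)
```

In this sketch, the person who changed roles keeps email but has the financial aid entitlement flagged for removal, and a deactivated account has everything flagged. A real system could route these revocations through a human approval step rather than applying them automatically.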

Additionally, they plan to leverage AI for adaptive cybersecurity, ensuring robust protection that evolves to counter emerging threats.

Final Thoughts

In reflecting on UCLA’s approach, it’s clear to me that the success of AI-powered solutions in higher ed depends on more than just technical innovation. The human factors of trust, bias, and usability remain central to these solutions.

Systems must be designed not only to save people’s time, but also to empower the people who rely on them every day.

When stakeholders are involved in shaping solutions, and when transparency and ethical commitments are prioritized, users are more likely to trust and adopt new tools.

Ultimately, AI should make processes easier and faster, but it must also be understandable to those it serves.

The most impactful systems are those that enhance human capabilities, foster collaboration, and build confidence.

At the same time, higher ed organizations don’t need highly customized generative AI solutions to solve real problems.

For publicly available content, where copyright is not a big concern, custom GPTs built on OpenAI’s platform can be cost-effective and shorten time to market for your solution.